Keeping Lightroom Classic’s Files in Check: Part 2

Wrapping up last week’s post with some more tips for keeping Classic in control.

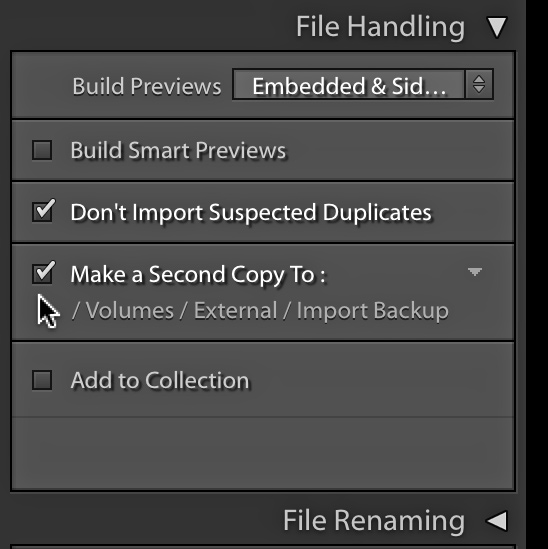

Managing Import Backup Copies

We left off last week speaking of automated backup processes that are left to the user to manage. The Make a Second Copy To option in the File Handling panel of the Import dialog is another potential disk space hog.

This is a very well intentioned checkbox whose purpose is to allow you to copy the contents of your memory card to two different drives at the same time as a function of the import process. This is a very handy feature if your regular full-system backup function only runs once a day (or perhaps less frequently), and you want to duplicate the new photos immediately so that you can format your memory card sooner and return it to service.

That is all well and good, but it is important to know that Lightroom has absolutely no continued connection to this second copy of photos it creates. It won’t apply develop presets to the second copies, iterative copies created via Edit in Photoshop aren’t included here, and Lightroom won’t update the second copies in any way after you start working on the originals in Lightroom. Management of those second copies is entirely in your hands. This second copy is essentially a backup of your memory card at the time of import, and is forever frozen in time. I’ll assume you already have a full-system backup plan that includes your working photos and all the changes you make to them over their lifetime. This additional “second copy to” backup of your memory card is something you need to actively manage so that it does not needlessly waste drive space.

Since Lightroom never changes your source files, and you have a full system backup (and maybe an offsite backup too), do you also need to keep a backup of every memory card for all eternity? (If you do, it’s nice to have this option.) Redundancy in your backup is great, but it should be logical and managed redundancy. I periodically delete the old second copies once my full system backup has run to include the new arrivals.
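If you want to see how much space older second copies are taking up before you delete anything, a small script can report it. The following is only a minimal Python sketch under a few assumptions of mine: the function names are hypothetical, the 30-day threshold is arbitrary, and it uses each file's modification time as a stand-in for its age, which may reflect the capture date rather than the import date depending on how the files were copied. It only lists files; it never deletes anything.

```python
import time
from pathlib import Path


def stale_second_copies(root, min_age_days=30, now=None):
    """Return (path, size_in_bytes) for every file under root whose
    modification time is more than min_age_days in the past."""
    now = time.time() if now is None else now
    cutoff = now - min_age_days * 86400  # 86400 seconds in a day
    stale = []
    for path in Path(root).rglob("*"):
        if path.is_file():
            info = path.stat()
            if info.st_mtime < cutoff:
                stale.append((path, info.st_size))
    return stale


def total_gb(entries):
    """Sum the sizes in a list of (path, size) pairs, in gigabytes."""
    return sum(size for _, size in entries) / 1024**3
```

Calling `stale_second_copies()` on your own second copy folder and passing the result to `total_gb()` gives a rough idea of what could be reclaimed. Confirm that your full system backup already includes those photos before removing anything by hand.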

Previews

The next item to consider is the regular preview cache that exists alongside the catalog file. For every photo that goes through the import process a collection of preview files is created and stored in this cache folder. The goal of the cache is to speed up Lightroom’s performance by displaying these previously rendered (with Lightroom’s settings) previews instead of re-rendering from the source file each time, and to allow for a certain level of offline viewing, such as when an external drive is disconnected. The preview cache is not an essential file, as Lightroom can (and will) re-render the previews as needed if they go missing, and so it is not included in Lightroom’s catalog backup process. You might consider excluding it from your full system backup process too if that is useful to you.

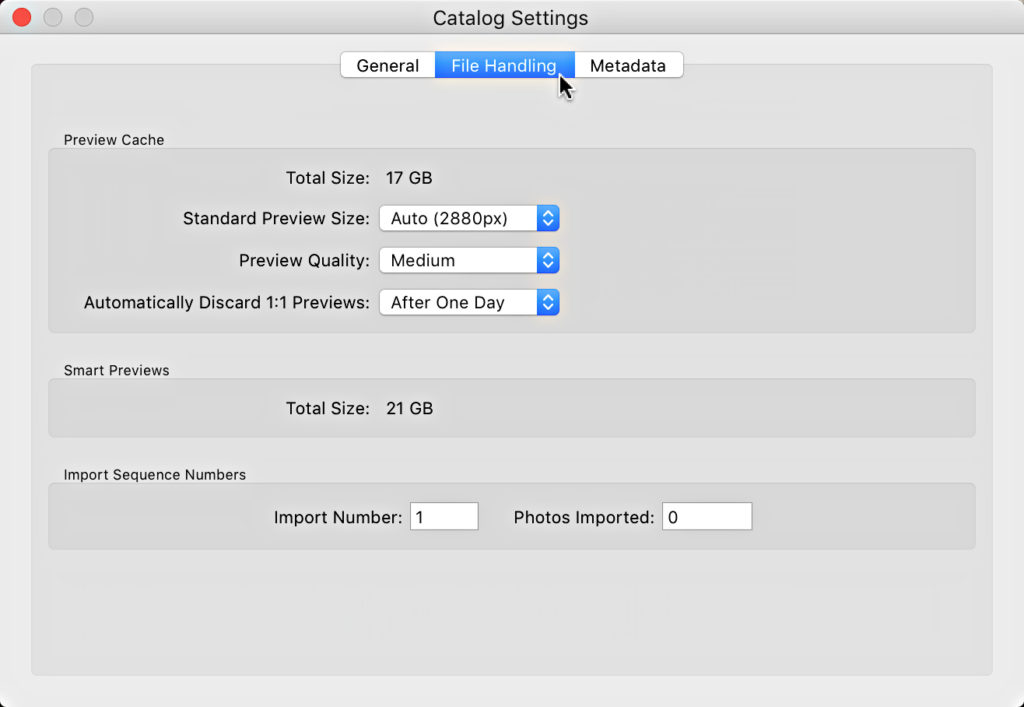

There are a couple of user-configurable settings that can affect the size of the preview cache, and these can be found on the File Handling tab of the Catalog Settings dialog box.

You can think of this preview cache as a folder of JPG images, and as such the two factors that affect file size are pixel dimensions and the amount of JPG compression. The Standard Preview setting controls the pixel dimensions of the previews that you see in all areas of Lightroom (except the main Develop window and when you zoom in to 1:1 in Library), and it comes in a range of sizes. The Auto setting is intended to automatically configure the largest size of the standard preview to match the screen resolution of your display device, and is a set-it-and-forget-it type of setting. The Preview Quality setting controls the amount of compression, and Medium is a good compromise between file size and quality.

Full-sized previews, referred to as 1:1 previews, are also stored in this cache, and these are created any time you zoom to 1:1 in Library or if you choose the 1:1 preview option on the Import dialog. You can also manually force them to render via the Library > Previews > Build 1:1 Previews menu. These are the previews that can really eat up some disk space over time. If you look on the File Handling tab of the Catalog Settings you’ll see an option for scheduling when to discard old 1:1 previews, and there is also a manual method to discard under that Library > Previews menu. However, the logic behind the actual removal of the full-sized previews is a little more complicated than that, so what I do is simply delete the entire Previews.LRDATA file once a year or so and force Lightroom to re-render a fresh set of standard previews. I know it sounds extreme (and I’m NOT saying you need to do this), but there’s no better way to remove all the detritus that builds up over time, and all it costs is the time it takes Lightroom to re-render them. Your photos will need to be accessible to the catalog to have the previews re-rendered, so make sure you don’t have any missing/offline files before doing this.
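If you are curious how large the preview cache has grown before deciding whether any of this is worth doing, you can total up the folder yourself. This is a minimal Python sketch, not anything built into Lightroom: the function names are my own, and you would pass in the path to the cache that sits next to your own catalog.

```python
from pathlib import Path


def folder_size_bytes(folder):
    """Add up the size of every file inside folder, including subfolders."""
    return sum(p.stat().st_size for p in Path(folder).rglob("*") if p.is_file())


def format_size(num_bytes):
    """Express a byte count in gigabytes with one decimal place."""
    return f"{num_bytes / 1024**3:.1f} GB"
```

For example, `format_size(folder_size_bytes(path_to_cache))` returns a string such as "21.0 GB" that you can compare from one year to the next. Close Lightroom first so the cache is not changing while it is being measured.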

Smart Previews

Aside from the regular previews we also have the option to create and use Smart Previews. Like the regular previews this folder exists alongside the catalog file, but unlike the regular previews, using Smart Previews is entirely optional. Discussing the ways to use Smart Previews could be an entire article itself, but suffice it to say that using Smart Previews gives us the ability to have a very powerful and lightweight workflow when our photos are offline. If you refer back to the File Handling tab of the Catalog Settings dialog you can also see that it displays a running tally of the size of this cache. The size will vary based on how many Smart Previews you have built. In my case the cache is 21 GB, which represents almost 21,000 Smart Previews.

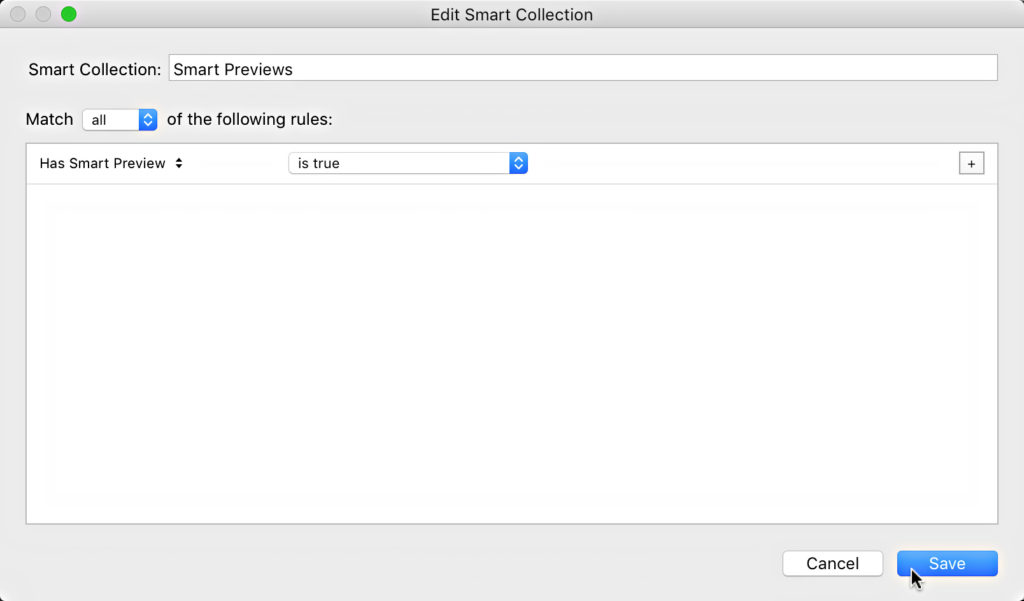

You can manage your Smart Previews by discarding the ones you no longer need. An easy way to track photos that have a Smart Preview is to create a Smart Collection using the rule Has Smart Preview is True. This automatically gathers up all photos having a Smart Preview. You can then select the photos whose Smart Previews you want to discard in Grid view and go to Library > Previews > Discard Smart Previews, where you will be prompted to confirm this action by clicking the Discard button.

Seems sane. I write to XMPs by default, and copy those to the “Second copy” folder on another drive weekly. If I lose my primary drive, I can then import and have most editing preserved. (I don’t use collections, and only a few virtual copies.) Not real sophisticated, but it’s something fast and dirty with some value.

Bob, why not use software that intelligently clones the contents of the drive containing your photos to a backup drive? That way you have all your working files in 2 places in precisely the same state? This software can be scheduled to run, and only backs up new and changed files, which makes it much faster than manually copying and pasting.

If I understand your workflow, then you are relying on the second copy as the long-term backup. If that is the case, then you are not backing up any iterative copies made in Photoshop (or other programs). While you are copying/pasting XMP files, it assumes you never rename your photos after import, because if you do, the XMP you copy over won’t match the second copy. As you stated, you lose virtual copies and collection membership, which you don’t make much use of, but you also lose all history steps and all flags. You can live without those too, but just keep that in mind.

The worst aspect of the scenario you describe would be having to reimport everything from scratch. Yes, you’d have your latest develop adjustments, but you’d have to go through and re-delete any photos you already deleted the first time, and you’d lose all your iterative copies (PSD, TIF).

If you simply clone your working drive on a regular basis, you can schedule it, and it will include all file name changes and iterative copies made after import. As long as you are backing up your catalog, you don’t need the XMP files, but it is fine as a second security blanket. Best of all, you don’t have to reimport photos and redo all that work.
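To make “only backs up new and changed files” concrete, here is a rough Python sketch of the idea. It is an illustration under my own assumptions, not how any particular backup product works: the function name is hypothetical, a file counts as changed when its size differs or its modification time is newer than the backup's, and files deleted from the source are not removed from the backup, so this is a one-way copy rather than a true clone.

```python
import shutil
from pathlib import Path


def copy_new_and_changed(source, dest):
    """Copy files from source to dest when they are missing from dest or
    look changed. Returns the relative paths that were copied."""
    source, dest = Path(source), Path(dest)
    copied = []
    for src in sorted(source.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(source)
        target = dest / rel
        if target.exists():
            s, t = src.stat(), target.stat()
            # Assumption: same size and a source no newer than the backup
            # means the file has not changed since the last run.
            unchanged = (s.st_size == t.st_size
                         and int(s.st_mtime) <= int(t.st_mtime))
            if unchanged:
                continue
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, target)  # copy2 also carries over the modified time
        copied.append(rel)
    return copied
```

The first run copies everything, and later runs only touch what is new or different, which is why scheduled cloning is so much faster than copying and pasting by hand. Dedicated backup software adds verification, scheduling, and handling of deletions on top of this.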

With LR 7.2 coming, imports will be massively faster so it’s a lesser issue. lol Having files I’d deleted is a livable problem as long as I have the ones I wanted too, but you’re correct of course.

Nothing you note isn’t legit and good practice, especially for someone self-contained who makes $ from their photography – which I don’t. I do image, I’m talking about an extra level – files on backups get corrupt too – and why below.

I’ve been responsible for petabytes of commercial data and hundreds of servers and thousands of PCs at a time (from both administrative and managerial/director levels). I’ve signed the PO for over $1M for backup system components and software I think 8 times now…

There is also risk in trusting image/clone files, CDs, drives… no matter how much luck you have with a backup vendor or your well-thought plans. Things can go bad… vendors disappear, products get cancelled right when you need support… been there and experienced it…

I keep a raw copy of core directory-level files/data in quick and dirty formats somewhere too. Keep install media etc. I replace drives every 2 years (I did it for all business laptops too; servers, 3 years).

Well-thought, quick & dirty, close enough & done is better than perfect sometimes in a fallback mode. Speaking from lots of professional experience. Having data far removed from any vendor-specific file formats can be a virtue, especially if you change vendors and no one wants to write you conversion software.

My mentioned copy of xmps was the last stash of such. And I think I’ll add the (few) PSDs and TIF (more) to the selection with the XMPs.

Ok, you’ve clearly thought it all out. I will say for clarity, that I was not suggesting any solution that would tie your backup data to any vendor. At least no more than you are by using XMP files. May you never have to face such a situation in any case! 🙂